Samsung Electronics shares jumped 5.4% on Monday following confirmation that HBM4 mass production will start this month. The Korean chipmaker secured a supply contract for Nvidia's Vera Rubin AI accelerator, edging out competitor Micron Technology.

Industry sources told Yonhap News Agency that Samsung will begin shipping sixth-generation high-bandwidth memory chips to Nvidia next week. Production matches Nvidia's launch schedule for its next-generation AI platform, named Vera Rubin after the American astronomer.

Micron stock dropped 3.2% on the same day. Semiconductor analysis firm SemiAnalysis revised Micron's HBM4 share in Nvidia's Vera Rubin chips to zero percent.

"There are no signs Nvidia will order Micron's HBM4," the firm stated.

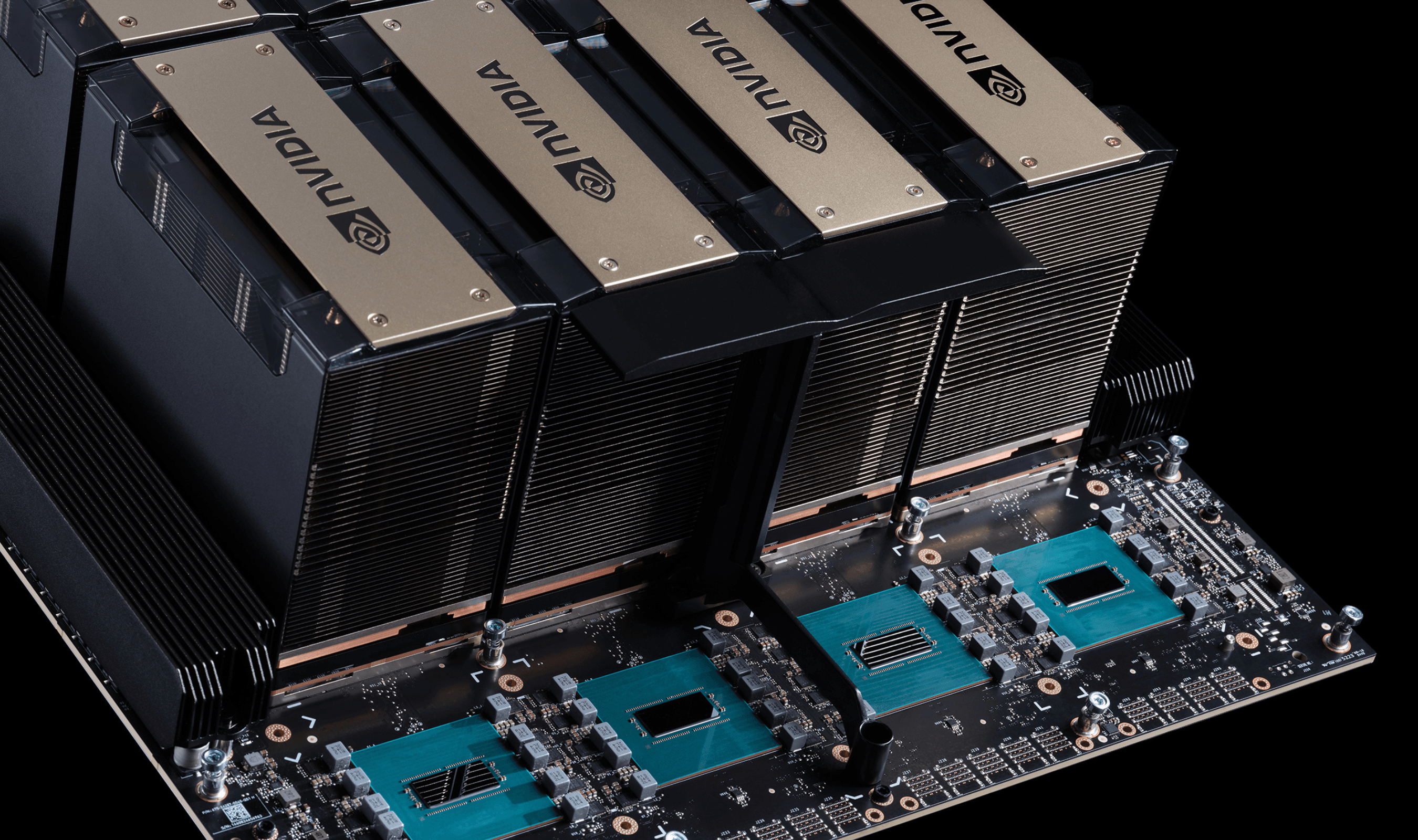

Nvidia raised HBM4 data transfer speed requirements to at least 11 gigabits per second in the third quarter of 2025. While Micron publicly claimed 11 Gbps capability, industry sources indicate the company struggled to meet this performance threshold.

Samsung and SK Hynix will supply all HBM4 chips to Nvidia, according to multiple reports. The two Korean manufacturers will split the contract, with SK Hynix taking 70% of shipments and Samsung securing 30%.

Samsung passed Nvidia's quality certification process and received purchase orders earlier this month. The company plans to capitalize on its position as the only semiconductor manufacturer offering solutions across logic, memory, foundry, and packaging.

HBM4 chips reportedly reach data transfer speeds of up to 11.7 Gbps, exceeding industry standards and running 22% faster than previous-generation HBM3E chips. The technology represents the sixth generation of high-bandwidth memory designed specifically for AI workloads.
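
The speed figures reported here can be cross-checked with simple arithmetic. The following is a minimal sketch in Python, assuming the 22% figure is a gain relative to HBM3E and that 11.7 Gbps is compared directly against Nvidia's 11 Gbps requirement. The function and constant names are hypothetical and used only for illustration.

```python
# Figures quoted in the article; all names here are illustrative only.
HBM4_REPORTED_GBPS = 11.7    # reported peak HBM4 speed
NVIDIA_REQUIRED_GBPS = 11.0  # Nvidia's minimum HBM4 speed requirement
GAIN_OVER_HBM3E = 0.22       # ASSUMPTION: 22% is measured against HBM3E


def implied_baseline(speed_gbps: float, gain: float) -> float:
    """Return the older part's speed implied by a fractional gain."""
    return speed_gbps / (1.0 + gain)


def headroom(speed_gbps: float, required_gbps: float) -> float:
    """Return the fractional margin of a speed above a requirement."""
    return (speed_gbps - required_gbps) / required_gbps


if __name__ == "__main__":
    baseline = implied_baseline(HBM4_REPORTED_GBPS, GAIN_OVER_HBM3E)
    margin = headroom(HBM4_REPORTED_GBPS, NVIDIA_REQUIRED_GBPS)
    print(f"Implied HBM3E speed: {baseline:.2f} Gbps")
    print(f"Margin over Nvidia's requirement: {margin:.1%}")
```

Under these assumptions, the implied HBM3E speed comes out at roughly 9.6 Gbps, and the reported HBM4 peak sits about 6% above Nvidia's stated minimum.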

Samsung's stock had already gained more than 30% this year before Monday's rally. Memory chip prices climbed throughout early 2026 as hyperscalers including Amazon and Alphabet invested billions in AI infrastructure development.

Four major hyperscalers plan to spend approximately $650 billion on AI infrastructure this year. These spending plans contributed to Nvidia's 8% stock increase on Friday, creating positive momentum for its supply chain partners.

The HBM market currently operates on fifth-generation HBM3E technology, where SK Hynix maintains market leadership. Samsung's HBM4 production marks its attempt to close the technology gap with its domestic rival.

Samsung will begin mass shipments during the third week of February, according to Yonhap. The timing coincides with the Lunar New Year holiday period in East Asia.

Micron CEO Sanjay Mehrotra previously indicated his company would ramp up HBM4 production during the second quarter of 2026. Samsung's accelerated timeline gives the Korean manufacturer a competitive advantage in supplying chips to AI hardware developers.

Samsung shares reached 168,700 won by 01:18 GMT on the Seoul stock exchange. The rally reflects growing investor confidence in Samsung's position within the expanding AI semiconductor market.

High-bandwidth memory enables faster data processing in AI accelerators by stacking memory chips vertically. This architecture provides greater bandwidth than traditional memory configurations, making it essential for generative AI systems.
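
To illustrate why a wide, stacked interface matters, the sketch below converts a per-pin transfer speed into total bandwidth for one memory stack. It assumes a 2048-bit interface per HBM4 stack, a figure that does not appear in this article and is included here only as an assumption. The function name is hypothetical.

```python
# ASSUMPTION: 2048-bit interface per HBM4 stack (not stated in the article).
ASSUMED_INTERFACE_WIDTH_BITS = 2048


def stack_bandwidth_gb_per_s(
    pin_speed_gbps: float,
    interface_width_bits: int = ASSUMED_INTERFACE_WIDTH_BITS,
) -> float:
    """Convert per-pin speed (gigabits/s) to stack bandwidth (gigabytes/s)."""
    # Every pin moves pin_speed_gbps gigabits per second; 8 bits per byte.
    return pin_speed_gbps * interface_width_bits / 8


if __name__ == "__main__":
    for speed in (11.0, 11.7):  # Nvidia's minimum and the reported peak
        bandwidth = stack_bandwidth_gb_per_s(speed)
        print(f"{speed} Gbps per pin -> {bandwidth:.1f} GB/s per stack")
```

Under that assumption, the reported 11.7 Gbps per pin corresponds to roughly 3 TB/s for a single stack, which shows how total bandwidth scales with interface width as well as pin speed.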

Samsung's production capacity and product variety position the company to capitalize on rising AI memory demand.

Having demonstrated its technological recovery by becoming the first to mass-produce the highest-performing HBM4, the company will begin shipping these sixth-generation high-bandwidth memory chips to Nvidia next week.