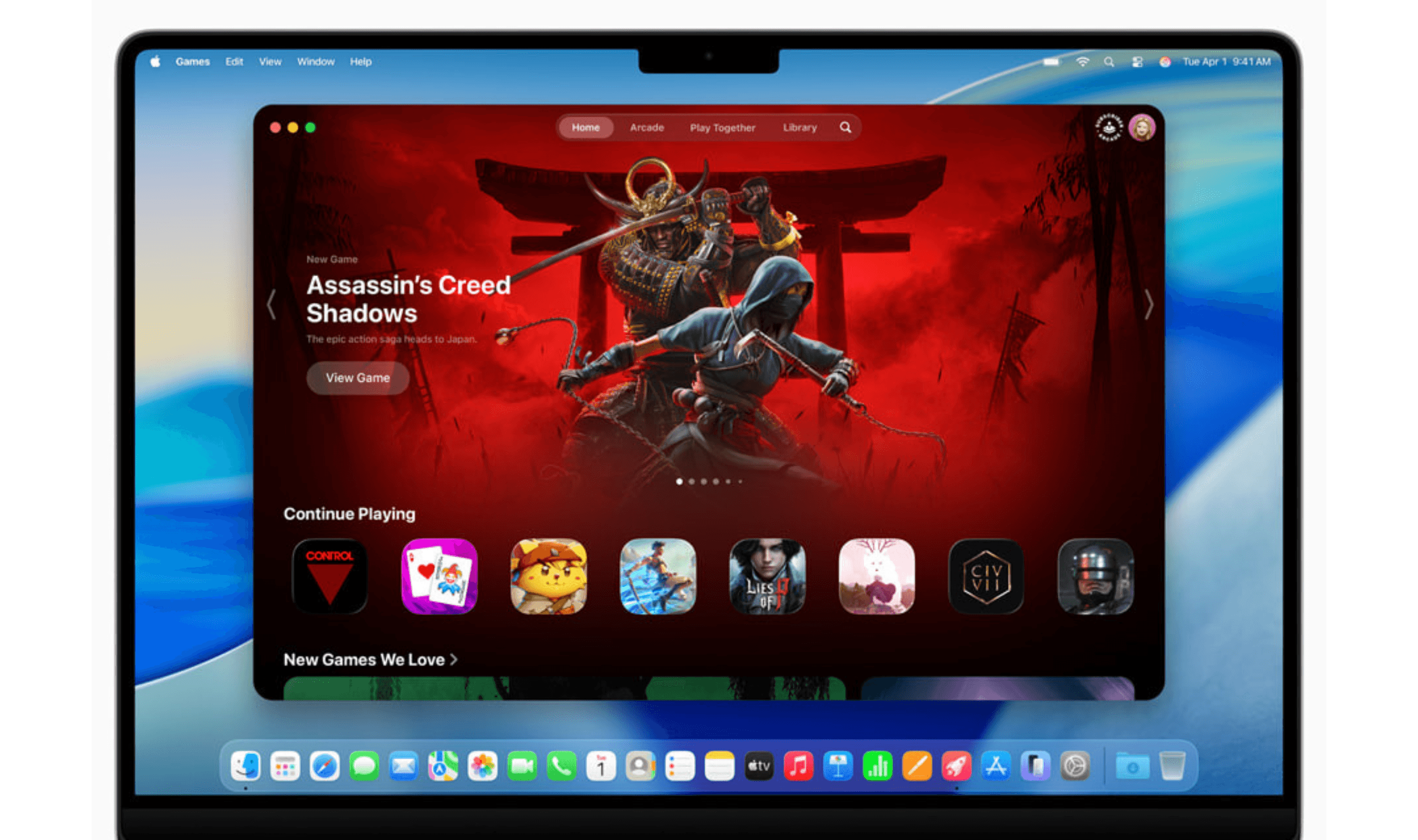

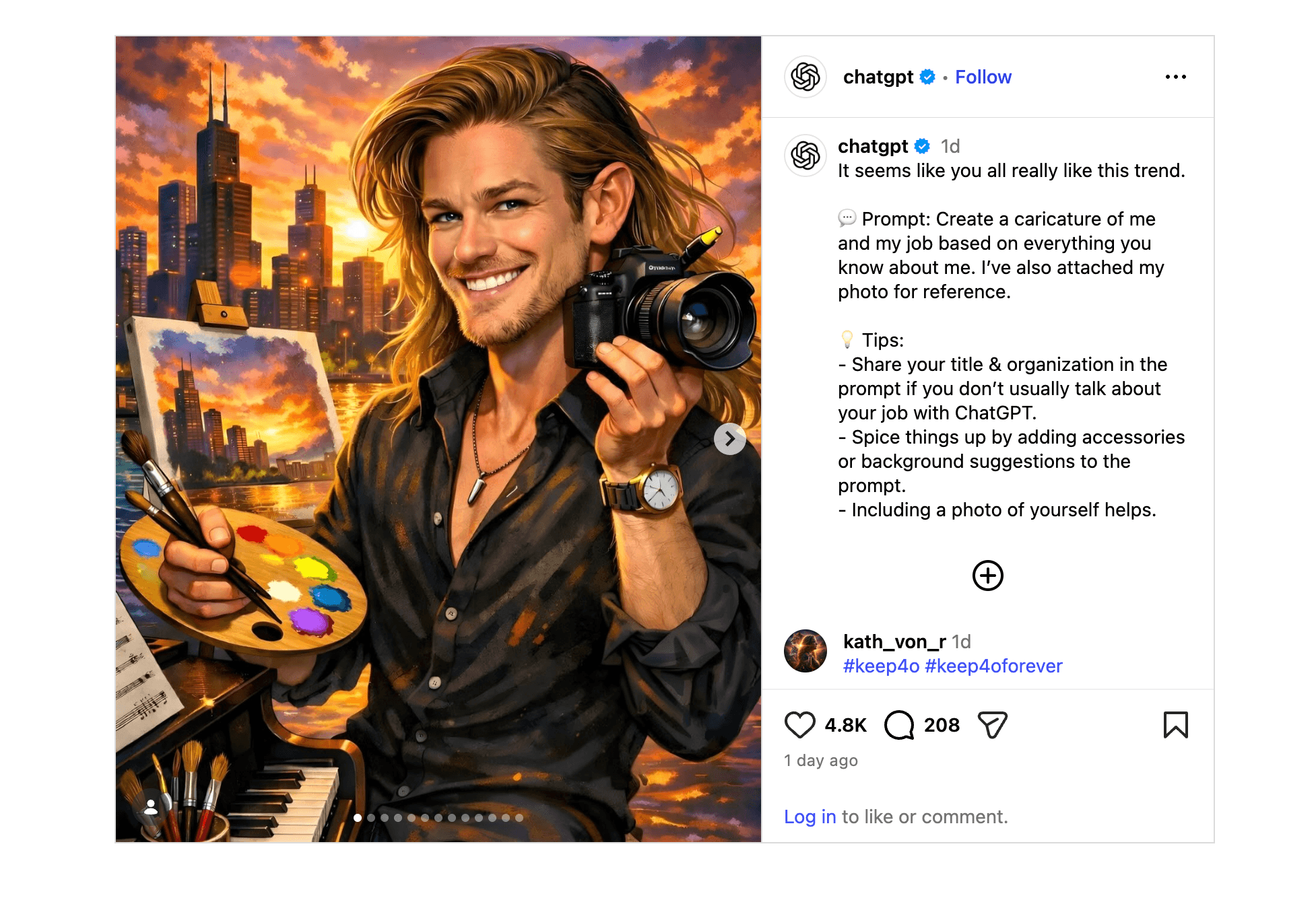

ChatGPT's viral caricature trend exposes privacy risks as users flood social media with AI-generated portraits. The prompt "create a caricature of me and my job based on everything you know about me" has become the first major ChatGPT trend of 2026, following 2025's action figure frenzy.

Users worldwide are transforming themselves into illustrated hairdressers, stylists, chefs, and doctors based on their professions and hobbies. The AI generates unique caricatures from uploaded photos and chat history, with results appearing across Instagram, LinkedIn, WhatsApp, and X.

Cybersecurity experts warn that the trend represents the normalization of data oversharing.

"In under an hour, an AI can accumulate enough data to convincingly impersonate someone," said Dr. Janet Bastiman, Chief Data Scientist of Napier AI.

Users expose themselves to identity misuse, fraud, and reputational harm by feeding personal information into large language models.

A caricature's accuracy depends on the user's interaction history. Users with extensive chat logs receive more detailed results featuring background elements like books with specific titles, editing software interfaces, and fantasy football statistics.

Users with little or no chat history must provide clear instructions to get a precise image.

Matt Conlon, CEO of security firm Cytidel, compared the trend to early social media oversharing.

"Users are actively feeding increasingly detailed personal information into generative models to 'improve' the output," he said. "If the result isn't accurate, they simply add more context, effectively training an AI system with highly personal data."

OpenAI's privacy policy allows submitted content to be used to improve its services and products, with broad sharing permitted across affiliates. Once caricatures are posted online, they can be copied or scraped beyond the user's control.

Third-party cartoon apps saw downloads surge 221% during the trend's initial week. Two popular applications gained over 245,000 additional downloads within a single three-day window as participation peaked.

Digital sociologist Jessamy Perriam described the trend as "fun but eerie at the same time." She questioned how much users want ChatGPT to know about them, noting uncertainty about whether disclosed information remains siloed or gets used in other scenarios.

Australian data shows 78% of citizens worry about AI's potential negative outcomes. Social media reactions range from enthusiasm to concern, with one user stating, "Can't believe people are willingly feeding their private infos to ChatGPT for a stupid trend!"

Privacy mitigation options include opting out of model training, using temporary chat sessions, and disabling memory features. Oliver Simonnet, lead cybersecurity researcher at CultureAI, advised following a simple rule: "If you would not share it publicly, you probably want to avoid including it in an AI prompt."

The trend highlights AI's expanding role in digital creativity while raising questions about data security as artificial intelligence becomes increasingly personalized.