On February 7, ByteDance launched Seedance 2.0, a new AI video generation model that produces multi-scene narratives with synchronized audio. According to CNBC TV18, the system generates 2K video approximately 30% faster than competing models such as Kling.

Seedance 2.0's headline feature is "multi-lens storytelling," which creates several connected scenes while keeping characters and visual style consistent across them. This reduces the manual editing typically required to stitch individual AI-generated clips together.

The model supports eight languages, including Russian and English, and accepts text, images, short videos, or audio as input references.

The model is currently available through the Chinese version of ByteDance's Dreamina service, known as Jimeng, which users access with their Douyin accounts. By the end of February, Seedance 2.0 is expected to expand to third-party platforms including CapCut, Higgsfield, and Imagine.Art, according to ForkLog.

Early testers reported "incredibly natural, almost professional" transitions between scenes. One user, el.cine, described features that "seem illegal," noting that the system generates complete scenes with visual effects, sound, voices, and music from uploaded scripts.

The launch triggered immediate market reactions. COL Group shares hit the 20% daily trading limit, while Shanghai Film and Perfect World each rose 10%. Analysts at Kaiyuan Securities called the product a potential "singularity moment" for AI in screen-based content creation.

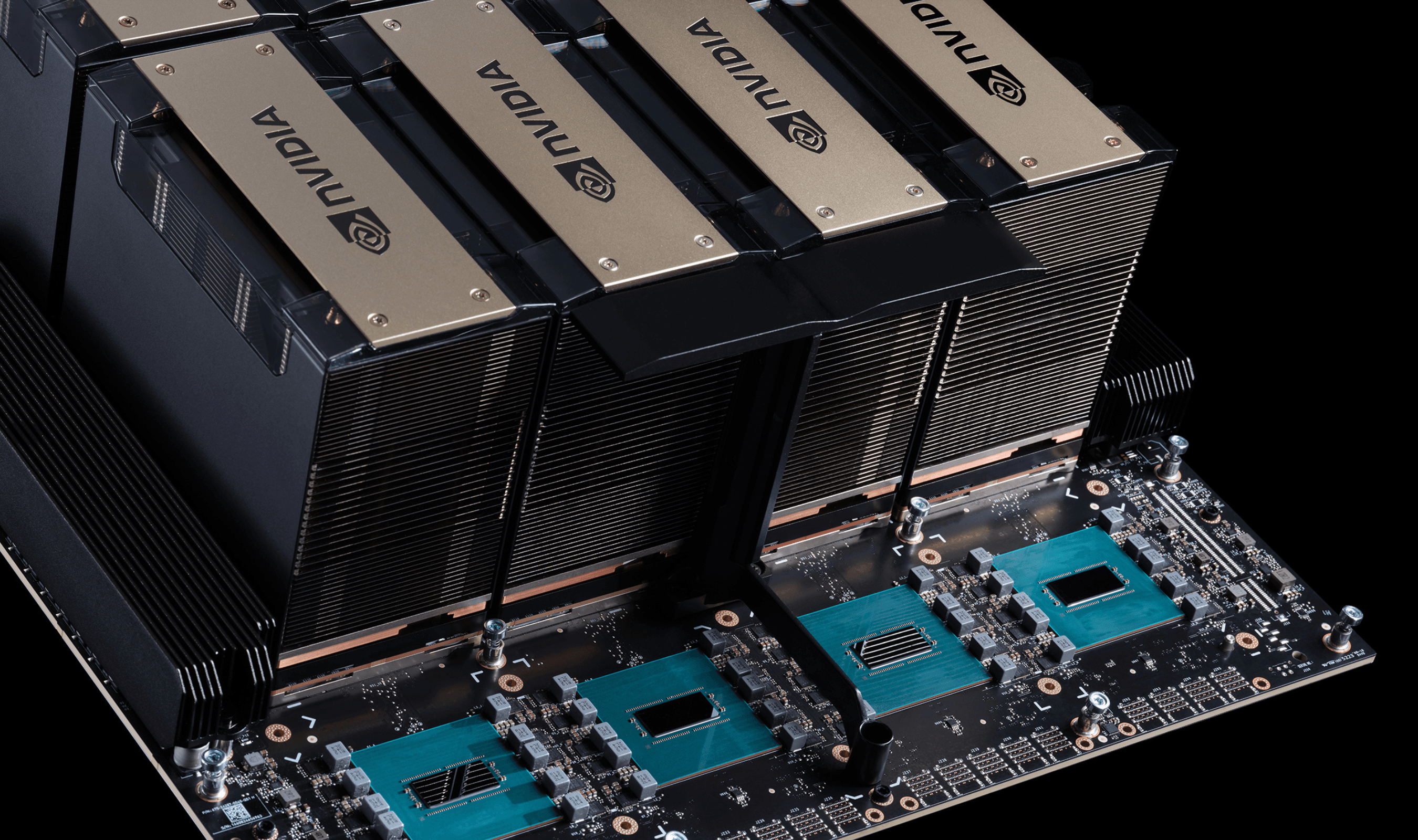

The stock surge follows similar investor enthusiasm for AI semiconductor stocks like Montage Technology, which saw its shares jump 64% in its Hong Kong IPO debut earlier this year.

Seedance 2.0 arrives as Chinese tech firms accelerate AI video development. Kuaishou Technology's Kling AI model now has more than 22 million users worldwide, who have generated 168 million video clips and 344 million images. ByteDance's release follows its earlier video generators, PixelDance and Seaweed, which debuted in private beta last year.

The model uses a dual-branch diffusion transformer architecture that generates video and audio together rather than producing one and layering the other on top. This native co-generation approach is designed to avoid the audio-video synchronization problems common in earlier AI video systems.

Industry figures have also weighed in on AI's role in film production. Netflix co-CEO Ted Sarandos said: "AI is an incredible opportunity to help creators improve films, particularly in pre-visualization and visual effects."

Director James Cameron called technology that imitates creative thinking "interesting," noting that it mirrors processes artists already carry out in their heads.

Seedance 2.0's limited beta is currently accessible to select users of Jimeng AI, ByteDance's AI video platform. The company plans broader availability as it competes in China's intensifying AI video generation race.