A 77.1% score on the ARC-AGI-2 benchmark shows Google's latest AI model more than doubling the logical-reasoning score of its predecessor, which was released just three months ago.

Gemini 3.1 Pro delivers what Google calls "a step forward in core reasoning," achieving a verified 77.1% on ARC-AGI-2, a test that evaluates a model's ability to solve entirely new logic patterns. The previous Gemini 3 Pro managed only 31.1% on the same benchmark.
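As a quick check on the "more than twice" framing, the two reported ARC-AGI-2 scores can be compared directly. This is a minimal sketch that uses only the figures quoted above; the helper name `score_comparison` is illustrative, not part of any benchmark tooling.

```python
def score_comparison(new_score: float, old_score: float) -> tuple[float, float]:
    """Return (ratio, percentage-point gain) between two benchmark scores."""
    ratio = new_score / old_score
    gain = new_score - old_score
    return ratio, gain


# ARC-AGI-2 scores as reported: Gemini 3.1 Pro vs. Gemini 3 Pro
ratio, gain = score_comparison(77.1, 31.1)
print(f"ratio: {ratio:.2f}x, gain: {gain:.1f} points")
```

The ratio comes out to about 2.48, and the absolute gain is 46 percentage points.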

This mid-cycle update arrives exactly three months after Gemini 3 Pro launched in November. It marks the first time Google has used a ".1" version increment instead of waiting for a mid-year update, signaling faster iteration cycles in AI development.

The model targets complex problem-solving where simple answers aren't sufficient. It handles tasks like generating visual explanations of dense topics, synthesizing large datasets into unified views, and supporting creative workflows with advanced reasoning techniques.

Starting today, Gemini 3.1 Pro is rolling out to all users through the Gemini app, though Google AI Pro and Ultra subscribers receive higher usage limits. NotebookLM access remains exclusive to Pro and Ultra subscribers, while developers can preview the model through the Gemini API in Google AI Studio, Vertex AI, and Android Studio.

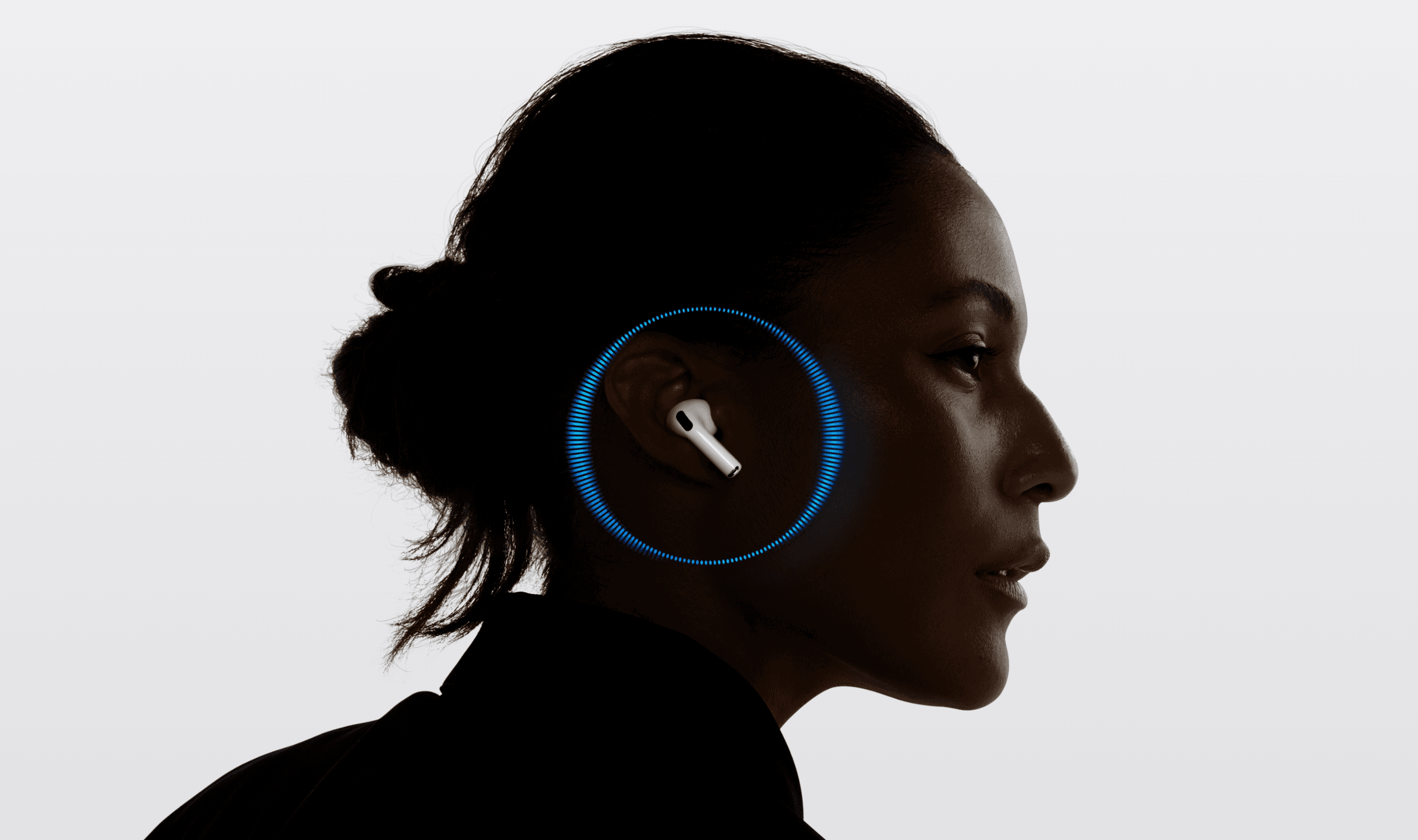

Beyond Android users, the upgrade also has implications for Apple's ecosystem: in January, Apple and Google announced a multi-year deal to power Siri with Gemini technology. Bloomberg reports that Apple plans to debut Gemini-powered Siri features in iOS 26.4 this month.

On competitive benchmarks, Gemini 3.1 Pro now leads the Artificial Analysis Intelligence Index, four points ahead of Claude Opus 4.6, and tops the APEX-Agents leaderboard, which measures performance on real professional tasks. According to Mercor CEO Brendan Foody:

"It also completes five tasks that no model has ever been able to do before."

Google is releasing this version in preview while it validates the updates, with general availability to follow soon.