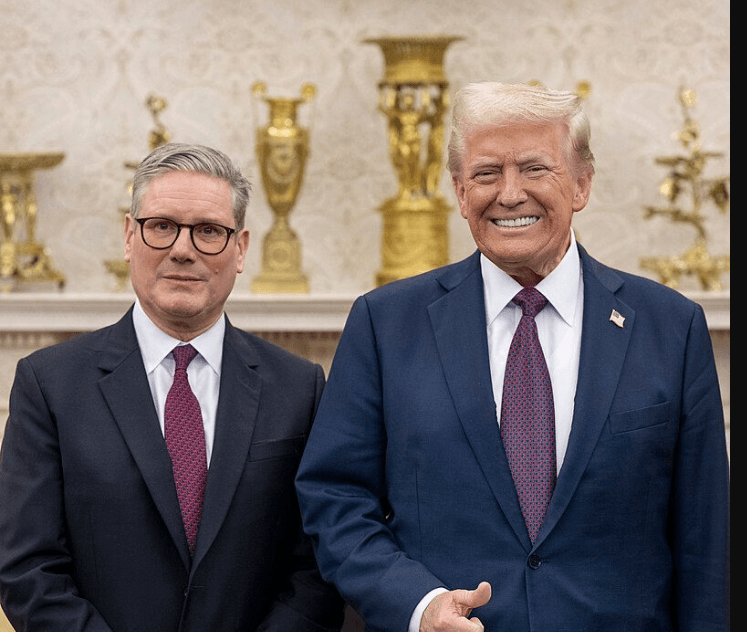

While tech headlines buzz about ChatGPT hitting 700 million weekly users and Trump's $500 billion Stargate AI infrastructure project, a quieter diplomatic story has been unfolding that could prove far more consequential for how we develop artificial intelligence. The US and UK have been aligning their AI strategies in ways that break with the international consensus on AI governance, and that alignment is starting to reshape the entire landscape.

That alignment just crystallized into concrete action: £150 billion in US investment pledges and a formal Tech Prosperity Deal signed at Chequers.

The most telling moment came in February at the Paris AI Action Summit, where around 60 countries signed a declaration calling for AI development that is "open, inclusive, transparent, ethical, safe, secure and trustworthy." Two notable holdouts? The United States and United Kingdom. Their coordinated refusal wasn't just diplomatic theater - it signals a fundamental shift in how the world's AI powerhouses want to build the future.

JD Vance, making his first international trip as Vice President, didn't mince words about why America walked away. Speaking to delegates in Paris, he warned that "excessive regulation of the AI sector could kill a transformative industry just as it's taking off." The message was clear: while Europe wants to regulate first and innovate second, the US-UK axis is betting on the opposite approach.

This isn't just about signing ceremonies. Behind the scenes, these two nations have been building what amounts to a parallel AI governance structure that prioritizes "pro-growth AI policies" over the safety-first approach that dominated the UK's own AI Safety Summit at Bletchley Park in November 2023. The irony is striking: Britain, which hosted the world's first major AI safety summit under Rishi Sunak, is now stepping back from that very framework.

The real AI alliance taking shape

The partnership materialized into hard commitments this week. Microsoft pledged £22 billion over four years, Google committed £5 billion including a new data center in Hertfordshire, and Blackstone announced a staggering £90 billion investment over the next decade, though details on how that money will be deployed remain vague. The Tech Prosperity Deal, signed Thursday at Chequers, formalizes what had been brewing behind the scenes: a technology partnership spanning AI, quantum computing, and nuclear energy.

The substantive cooperation goes deeper than symbolic rejections. In April 2024, the US and UK signed the first bilateral agreement specifically focused on testing advanced AI systems together. This partnership isn't just about sharing research - it's about ensuring their respective AI Safety Institutes can work in lockstep to evaluate the large language models that underpin everything from ChatGPT to Google's Gemini.

What makes this significant is the institutional weight behind it. The UK's AI Safety Institute has emerged as arguably the world's most advanced research body specifically focused on AI evaluation, while the US brings massive computational resources and direct access to the tech giants developing frontier AI models. Together, they're creating evaluation standards that could become the de facto global benchmarks.

But there's a catch that industry insiders are whispering about. According to sources familiar with the UK government's thinking, American AI firms have quietly suggested they might stop engaging with Britain's AI Safety Institute if the UK takes what they perceive as an "overly restrictive approach" to AI development. It's a not-so-subtle form of economic diplomacy that helps explain why both countries are now singing from the same regulatory songbook.

The Trump administration's approach amplifies this dynamic. With Trump announcing the massive Stargate venture involving OpenAI, SoftBank, and Oracle - promising to create over 100,000 jobs and outpace rival nations in AI development - the pressure on allies to choose sides is intensifying. The UK appears to have made its choice.

This shift hasn't gone unnoticed by AI researchers and policy experts. Gaia Marcus from the Ada Lovelace Institute expressed hope that the UK's move wasn't "a decision to reject the vital global governance that AI needs." Meanwhile, industry groups like UKAI (the UK's AI trade association) cautiously welcomed the government's refusal to sign the Paris declaration, seeing it as an opportunity to "work closely with our US partners" on more business-friendly approaches.

The numbers tell part of the story. Alphabet's projected $85 billion in 2025 capital expenditures and Meta's $66-72 billion guidance show the scale of US tech spending worldwide, and the new US commitments to the UK dwarf anything Europe can muster. With Blackstone alone pledging £90 billion over a decade (albeit with few specifics) and Microsoft (£22bn) and Google (£5bn) making concrete infrastructure commitments, the UK has effectively chosen its side in the AI race.

What's emerging is a three-way split in global AI governance that will likely define the next decade of technology development. Europe is doubling down on its AI Act - comprehensive regulations that classify AI systems by risk levels and impose strict requirements on high-risk applications. China continues expanding access through state-backed tech companies while maintaining tight political control. And now the US-UK partnership is carving out a third path: lighter regulation, heavier industry collaboration, and a bet that innovation will solve safety problems better than rules will.

Yet this pivot comes with complications. While US tech firms pledge billions, pharmaceutical giants are heading in the opposite direction. AstraZeneca paused £200 million in Cambridge investment plans, Merck redirected £1 billion in planned UK research investment to the US, and GSK is investing nearly £22 billion stateside. The contrast is hard to miss: as American tech money flows in, life sciences investment flows out of Britain, raising questions about whether the UK is trading one form of economic dependency for another.

The implications stretch far beyond regulatory frameworks. When the UK steps back from international AI safety commitments while deepening ties with US companies, it's essentially choosing to let market forces and bilateral cooperation guide AI development rather than multilateral treaties. For developers and companies building AI systems, this creates a clearer path forward - at least in the Anglo-American sphere.

The transformation is already visible in how both governments talk about AI. Where previous UK communications emphasized "responsible innovation" and "trustworthy AI," recent statements focus on "pro-growth policies" and "not squandering opportunities." It's a rhetorical shift that reflects deeper changes in how these nations view the balance between innovation and regulation.

For the global AI market, this US-UK alignment could prove decisive. When the UK, home to arguably the world's most advanced AI evaluation body, pairs with the US, home to the world's dominant AI industry, they're not just sharing technology; they're setting the standards that other nations will have to respond to. Countries looking to participate in cutting-edge AI development may find themselves choosing between European-style regulation and Anglo-American market-driven approaches.

Critics aren't staying quiet. Nick Clegg, the former Deputy Prime Minister and Meta's former president of global affairs, dismissed the investments as "crumbs from the Silicon Valley table," warning that the UK's "perennial Achilles heel" remains unaddressed: British startups still end up in the US as they seek growth capital. As finance writer Yves Smith argued at Naked Capitalism, the broader trade deal suggests the UK "gave away a lot" while still ending up with 11% effective tariffs. The fundamental question persists: is the UK building its own AI future or merely hosting America's?


The partnership that began as quiet coordination between AI safety institutes has now emerged into the open with the Tech Prosperity Deal, yet its most significant aspect remains unspoken: a shared rejection of international frameworks that both nations now view as potentially stifling innovation. In an industry where technological leadership often determines regulatory influence, this partnership could reshape not just how we build AI, but who gets to decide the rules for everyone else.
