Nvidia launched its Rubin AI platform at CES 2026 on Monday, promising 10x lower inference costs and a new storage architecture for agentic AI workloads. The six-chip system enters full production immediately with partner availability scheduled for the second half of 2026.

Nvidia says the Rubin platform delivers inference tokens at one-tenth the cost of its previous Blackwell generation and needs one-quarter as many GPUs to train mixture-of-experts models. Microsoft will deploy Vera Rubin NVL72 rack-scale systems in its Fairwater AI superfactories, scaling to hundreds of thousands of superchips across next-generation data centers.

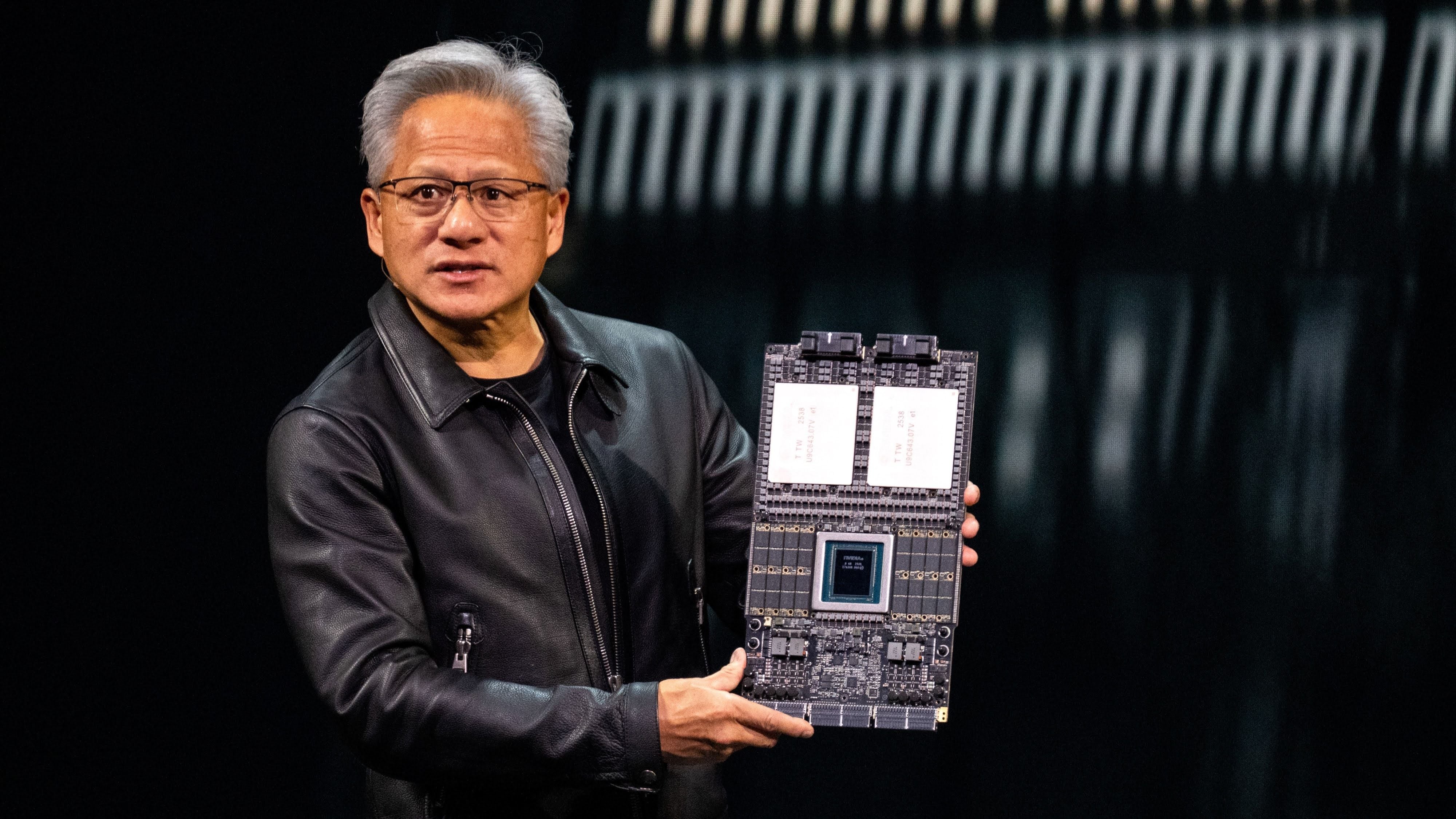

Nvidia's extreme codesign approach integrates six new chips: the Vera CPU, Rubin GPU, NVLink 6 Switch, ConnectX-9 SuperNIC, BlueField-4 DPU, and Spectrum-6 Ethernet Switch. The Vera Rubin NVL72 rack combines 72 Rubin GPUs with 36 Vera CPUs and delivers 260TB/s of bandwidth, which the company says is more than the entire global internet carries.

Each Rubin GPU achieves 50 petaflops of NVFP4 compute for AI inference, five times faster than Blackwell. The Vera CPU features 88 custom Olympus cores with 1.5TB of LPDDR5x memory and introduces rack-scale confidential computing that maintains data security across CPU, GPU, and NVLink domains.
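The per-GPU and per-rack figures quoted above can be cross-checked with simple arithmetic. The sketch below uses only numbers cited in this article and assumes peak figures scale linearly across the 72 GPUs in a rack, which is an idealization. The constant and function names are illustrative, and the "implied Blackwell" value is derived from the quoted five-times ratio, not taken from a published specification.

```python
# Peak, vendor-stated figures as quoted in this article.
GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
RACK_BANDWIDTH_TBPS = 260.0    # TB/s for the whole NVL72 rack
GPU_NVFP4_PETAFLOPS = 50.0     # per Rubin GPU, AI inference
SPEEDUP_VS_BLACKWELL = 5.0     # "five times faster than Blackwell"


def rack_summary():
    """Derive rack-level and per-GPU numbers, assuming ideal linear scaling."""
    return {
        "gpus_per_cpu": GPUS_PER_RACK / CPUS_PER_RACK,
        "bandwidth_per_gpu_tbps": RACK_BANDWIDTH_TBPS / GPUS_PER_RACK,
        "rack_nvfp4_exaflops": GPUS_PER_RACK * GPU_NVFP4_PETAFLOPS / 1000.0,
        # Implied by the quoted ratio only; not a published Blackwell spec.
        "implied_blackwell_petaflops": GPU_NVFP4_PETAFLOPS / SPEEDUP_VS_BLACKWELL,
    }


if __name__ == "__main__":
    for name, value in rack_summary().items():
        print(f"{name}: {value:.2f}")
```

Under these assumptions, the rack works out to two GPUs per CPU, roughly 3.6TB/s of bandwidth per GPU, and about 3.6 exaflops of peak NVFP4 inference compute.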

The platform's Inference Context Memory Storage Platform addresses agentic AI's memory bottleneck by moving key-value cache data from GPUs to shared AI-native storage. Powered by BlueField-4, this architecture delivers five times as many tokens per second and five times better power efficiency than traditional storage, according to Nvidia.
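The general idea of moving key-value cache data off the GPU can be illustrated with a toy two-tier cache. This is a minimal sketch of the concept, not Nvidia's software or any real storage API: the `TieredKVCache` class, its method names, and the least-recently-used eviction policy are all assumptions made for illustration, and plain Python dictionaries stand in for GPU memory and shared storage.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy two-tier cache: a small 'GPU' tier backed by a large shared tier."""

    def __init__(self, gpu_capacity):
        if gpu_capacity < 1:
            raise ValueError("gpu_capacity must be at least 1")
        self.gpu_capacity = gpu_capacity
        self.gpu_tier = OrderedDict()  # session_id -> KV blob, oldest first
        self.shared_tier = {}          # stands in for shared, network-attached storage

    def put(self, session_id, kv_blob):
        """Store KV data in the GPU tier, spilling the least recently used entry if full."""
        self.shared_tier.pop(session_id, None)  # drop any stale copy in the slow tier
        self.gpu_tier[session_id] = kv_blob
        self.gpu_tier.move_to_end(session_id)
        while len(self.gpu_tier) > self.gpu_capacity:
            evicted_id, evicted_blob = self.gpu_tier.popitem(last=False)
            self.shared_tier[evicted_id] = evicted_blob

    def get(self, session_id):
        """Return KV data, promoting it from the shared tier on a GPU-tier miss."""
        if session_id in self.gpu_tier:
            self.gpu_tier.move_to_end(session_id)
            return self.gpu_tier[session_id]
        if session_id in self.shared_tier:
            kv_blob = self.shared_tier.pop(session_id)
            self.put(session_id, kv_blob)  # reuse stored context instead of recomputing it
            return kv_blob
        return None  # true miss: the caller has to recompute the context


if __name__ == "__main__":
    cache = TieredKVCache(gpu_capacity=2)
    cache.put("a", b"kv-a")
    cache.put("b", b"kv-b")
    cache.put("c", b"kv-c")            # "a" spills to the shared tier
    print(sorted(cache.shared_tier))   # sessions currently held in shared storage
    print(cache.get("a"))              # promoted back into the GPU tier
```

The point of the second tier is that a long-running agent session evicted from GPU memory can be restored from storage rather than rebuilt from scratch, which is the trade-off the paragraph above describes.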

Major cloud providers including AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure will deploy Rubin-based instances in 2026. CoreWeave, Lambda, Nebius, and Nscale join as first-wave NVIDIA Cloud Partners, with CoreWeave integrating Rubin systems through its Mission Control operating standard.

AI labs across the industry have committed to Rubin adoption. OpenAI CEO Sam Altman stated the platform helps "keep scaling progress so advanced intelligence benefits everyone," while Anthropic's Dario Amodei cited efficiency gains enabling "longer memory, better reasoning and more reliable outputs."

Meta's Mark Zuckerberg called Rubin "the step-change in performance and efficiency required to deploy the most advanced models to billions of people." Elon Musk described the platform as "a rocket engine for AI" that reinforces Nvidia's position as the "gold standard" for frontier model infrastructure.

The Rubin announcement arrives amid industry-wide memory shortages, with data center projects consuming roughly 40% of global DRAM output according to Tom's Hardware. Nvidia's storage platform directly addresses this constraint by treating memory as a first-class design element rather than a computational byproduct.

Nvidia's accelerated production timeline, which moves from Blackwell Ultra shipments in late 2025 to Rubin full production in Q1 2026, demonstrates what Wccftech described as Jensen Huang's "fast and lethal" AI strategy. The company reportedly expects $500 billion in combined Blackwell and Rubin revenue across 2025 and 2026.

Competitive pressure from AMD's Helios rack systems, announced last spring and expected later this year, may have influenced Nvidia's early CES reveal. AMD promises equivalent floating point performance with 50% more HBM4 capacity per GPU socket, though Nvidia counters with faster 22TB/s HBM4 bandwidth achieved through silicon improvements.

The Rubin platform introduces third-generation confidential computing alongside a second-generation RAS Engine for reliability, availability, and serviceability. A modular, cable-free tray design enables assembly and servicing 18 times faster than on Blackwell, while NVLink Intelligent Resiliency allows zero-downtime maintenance.

Nvidia expanded its Red Hat collaboration to deliver a complete AI stack optimized for Rubin, including Red Hat Enterprise Linux, OpenShift, and Red Hat AI. The partnership targets Fortune Global 500 companies already using Red Hat's hybrid cloud portfolio.

Beyond data center infrastructure, Nvidia announced physical AI developments including Cosmos world models, GR00T humanoid models, and Alpamayo vision-language-action models for autonomous vehicles. These systems aim to reduce robotics training friction and address the "long tail" of rare driving scenarios.

The Rubin platform is Nvidia's third-generation rack-scale architecture and is supported by more than 80 MGX ecosystem partners. As AI workloads shift from single-turn queries to multi-step agentic reasoning, Nvidia positions Rubin as infrastructure capable of managing context at gigascale while maintaining security and efficiency.

Industry adoption begins immediately, with Cisco, Dell, HPE, Lenovo, and Supermicro expected to deliver Rubin-based servers. Storage partners including NetApp, Pure Storage, VAST Data, and WEKA are designing next-generation platforms for Rubin infrastructure deployment.