Apple's long-rumored smart glasses appear to be nearing reality, with multiple reports pointing to an announcement in 2026.

Bloomberg's Mark Gurman reports that Apple is planning to launch its first smart glasses as an iPhone accessory focused on AI features rather than augmented reality displays, positioning them as a direct competitor to Meta's popular Ray-Ban smart glasses.

Latest Updates

- January 2026: Apple is reportedly planning a major product refresh cycle for 2026 that includes smart glasses alongside dozens of other new products

- December 2025: 9to5Mac publishes comprehensive feature rundown confirming AI focus and lack of display in first model; Google and Warby Parker announce partnership for competing AI glasses launching in 2026

- October 2025: Bloomberg reports Apple has paused development on a cheaper, lighter Vision Pro variant (codenamed N100) to redirect engineering resources toward smart glasses; Tim Cook is reportedly intensely focused on the glasses effort

- May 2025: Bloomberg indicates late 2026 launch timeline for AI-focused smart glasses; Apple begins ramping up prototype production with overseas suppliers

Quick Specs at a Glance

| Spec | Details |
|---|---|
| Release Date | Announcement expected late 2026; shipping possibly early 2027 |
| Price | Unconfirmed; expected to compete with Meta Ray-Bans ($299-$379), with estimates ranging from $499 to $1,000 |
| Display | No built-in display in the first model; a display version is expected in 2028 |
| Processor | Custom chip based on Apple Watch S-series architecture (the glasses themselves are codenamed N401) |
| Camera | Multiple cameras for photos, videos, and Visual Intelligence features |
| Audio | Built-in speakers and microphones |
| Connectivity | Bluetooth; requires a paired iPhone |
| Key Features | AI assistant integration, iPhone accessory, fashion-focused design with multiple frame options |

Release Date and Availability

Bloomberg's Mark Gurman reports that Apple is expected to announce its smart glasses in late 2026, though actual shipping may not begin until early 2027. In May 2025, Gurman noted that Apple was "ramping up work" and planning to produce "large quantities of prototypes" by the end of that year, targeting a late 2026 release.

This accelerated timeline may be in response to Meta's breakout success with Ray-Ban smart glasses and Google's plans to enter the market with Gemini-powered glasses in 2026.

Apple is developing two variants of the glasses. The first model, developed under the codename N401 (previously N50), will be a display-less version targeting a 2027 release, with mass production beginning in Q2 2027, according to analyst Ming-Chi Kuo.

A second version with a built-in display could launch as late as 2028. This phased approach allows Apple to start with simpler AI glasses before introducing more advanced AR capabilities.

In October 2025, Bloomberg reported that Apple had paused development on a cheaper, lighter Vision Pro variant, codenamed N100 (also referred to as Vision Air), to redirect engineering resources toward smart glasses. The shift suggests Apple sees a larger near-term opportunity in glasses than in a lighter headset.

Design and Display

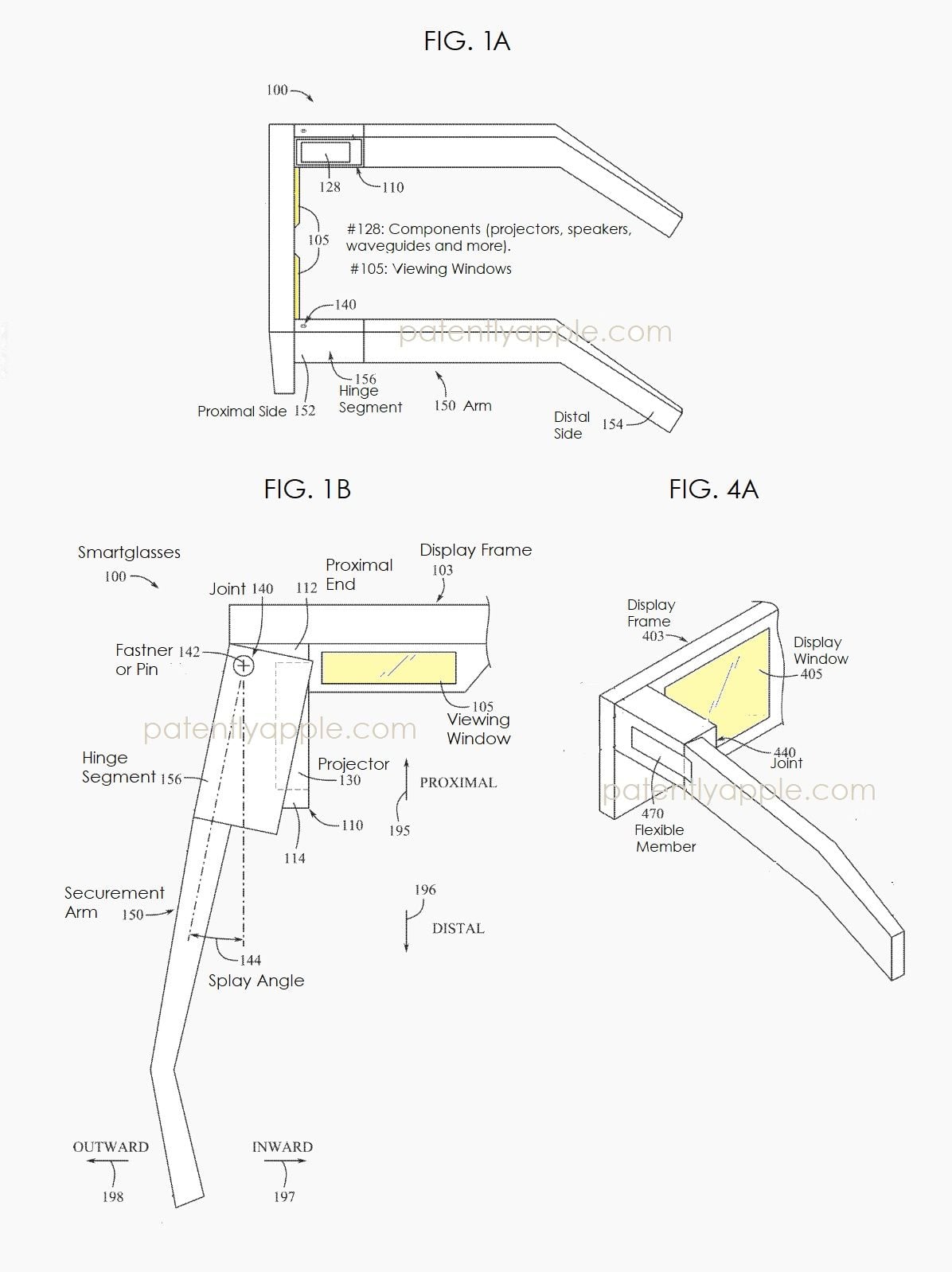

Recent patent filings reveal that Apple has been working on solutions for several critical design challenges. One patent describes a structural system that keeps key optical components in precise alignment while allowing adjustable, comfortable arms that resemble traditional eyewear.

Apple's approach isolates the optics in a fixed housing so that their alignment stays consistent when users adjust the fit.

The company is planning to offer multiple frame and temple material options, positioning the glasses as much a fashion accessory as the Apple Watch. Buyers will reportedly be able to choose their preferred color and frame style, selecting from both metal and plastic frames.

The arms of Apple's smart glasses will reportedly feature forward-set hinges instead of their usual placement near the temples, with segmented construction that includes an elongated segment extending to the user's ears.

All components are designed to fit inside relatively thin eyewear arms while maintaining firm optical alignment.

Based on Apple's experience with Vision Pro, the company will likely support prescription lenses. Apple currently partners with ZEISS to offer custom optical inserts for Vision Pro, and a similar arrangement could extend to the smart glasses.

Processor and Performance

Apple is developing a custom chip for the glasses, which are codenamed N401. The chip is reportedly based on the S-series architecture used in Apple Watch but optimized even further for power efficiency. It will need to control multiple cameras and handle AI processing while still delivering enough battery life for a device meant to be worn all day.

Apple is expected to offload more demanding processing to a paired iPhone, similar to how Apple Watch relies on iPhone for some tasks. This allows for more complex computations while keeping the glasses themselves lightweight and power-efficient.

The result is a device that emphasizes AI features over on-board computing power, with much of the heavy lifting handled by the connected iPhone.

Camera and AI System

The cameras in Apple's smart glasses will serve multiple purposes. They will be used for capturing photos and videos, but more importantly, they will enable Visual Intelligence features similar to those available on iPhone today.

The cameras will feed information to an AI assistant that can answer questions about what the wearer is seeing.

This AI will reportedly be able to control the glasses for tasks like snapping photos or playing music, plus provide directions and other contextual information.

The revamped Siri will be a key part of the glasses experience. Apple is reportedly rebuilding Siri around large language models, and a smarter, more capable version is expected to launch in spring 2026 with iOS 26.4.

This timing aligns with the glasses' development, as Apple needs a functional, next-generation Siri before the glasses can deliver on their potential. The upgraded Siri should be able to understand context, act across apps, and use the camera view to provide relevant assistance.

The glasses will reportedly include an LED indicator light that shows when the camera is active, addressing privacy concerns that have plagued other smart glasses products.

This feature is similar to the camera indicator lights found on laptops and other devices with built-in cameras.

Audio and Connectivity

Although the glasses will likely work with AirPods, they are also expected to include built-in speakers for media playback and Siri interactions. The audio system will need to balance sound quality with discretion, as glasses-worn speakers present unique acoustic challenges compared to earbuds or headphones.

Microphones will be integrated for voice commands and phone calls, with beamforming technology likely employed to isolate the wearer's voice from ambient noise.

This is particularly important for a device meant to be used in various environments, from quiet offices to noisy streets.

Connectivity will center on Bluetooth, and possibly Ultra Wideband for precise location tracking relative to the paired iPhone. The glasses will reportedly be marketed as an iPhone accessory similar to Apple Watch, requiring an iPhone for setup and full functionality.

Battery and Charging

Battery life expectations for Apple Glasses remain unclear, but given their positioning as all-day wearables, they will likely need to last at least a full day of typical use.

The lack of a display in the first model should help conserve power, as displays are typically the most power-hungry component in wearable devices.

Charging will likely use a magnetic connector similar to Apple Watch or a proprietary charging case like AirPods. Wireless charging is also a possibility, though the glasses' form factor may present challenges for standard Qi charging pads.

Apple's focus on power efficiency in its custom chip design suggests the company is prioritizing battery life, which will be crucial for a device meant to be worn throughout the day.

The glasses may include power-saving features like automatic sleep/wake detection based on whether they're being worn.

Software and Features

Apple Glasses will launch with deep integration into the iOS ecosystem, functioning as an iPhone accessory similar to Apple Watch.

The product will leverage Apple Intelligence for visual recognition, translation, and contextual assistance features.

The software experience will reportedly include hands-free control via Siri voice commands, with the AI assistant able to perform tasks based on what the wearer is seeing.

This could include identifying objects, translating text, providing directions, or answering questions about surroundings.

Privacy will be a major focus, with clear indicators when cameras or microphones are active and likely granular controls over what data is collected and processed. Apple's emphasis on on-device processing where possible aligns with its privacy-focused approach.

The glasses may include health and fitness tracking features, though likely more limited than Apple Watch given the different form factor and use case.

Basic activity tracking and possibly some novel health sensors could be included, building on Apple's expertise in health technology.

Price and Value

Pricing for Apple Glasses remains speculative. Meta's Ray-Ban smart glasses start at $299 (Gen 2 at $379), providing a competitive benchmark. The Meta Ray-Ban Display glasses with a built-in screen are priced at $799.

In 2020, tipster Jon Prosser suggested a $499 price point, but that report is now several years old and likely outdated given component cost changes and Apple's typical pricing strategy.

More recent analysis suggests the glasses could cost between $499 and $1,000 depending on features and materials.

The value proposition will center on the glasses' AI capabilities and fashion appeal rather than raw computing power. As an iPhone accessory, they'll need to provide enough utility to justify their cost while remaining accessible to a broad audience.

Apple may offer different price tiers based on frame materials and lens options, similar to Apple Watch editions.

Competition

The smart glasses market is heating up considerably. Meta has sold more than 2 million pairs of Ray-Ban smart glasses and plans to increase annual production capacity to 10 million units by the end of 2026.

In September 2025, Meta launched the Ray-Ban Meta Gen 2 at $379 and the Ray-Ban Display glasses with a built-in HUD at $799, including a neural wristband for gesture control.

Google is also returning to the market. In December 2025, Google and Warby Parker announced a partnership to launch AI-powered smart glasses in 2026, built on Android XR and powered by Gemini AI. Google is developing two types of devices: AI glasses for screen-free assistance and display glasses with in-lens information.

Apple's entry will likely command a premium price while offering deeper integration with the Apple ecosystem and potentially more advanced AI features once the upgraded Siri launches.

Should You Wait or Buy Now?

For most consumers, waiting for Apple's official smart glasses announcement makes sense, especially if you're already invested in the Apple ecosystem.

Current smart glasses options from Meta and others offer similar basic functionality but lack the deep iOS integration that Apple's version will likely provide.

If you need smart glasses immediately, Meta's Ray-Ban smart glasses represent the most polished option on the market, with good AI features and respectable battery life.

However, they lack the seamless Apple ecosystem integration that will likely be a key selling point for Apple's version.

For iPhone users who value ecosystem integration and are willing to wait for potentially more advanced AI features, holding off for Apple's official offering is the prudent choice.

The expected late 2026 announcement means the wait shouldn't be too long, though shipping may slip into 2027. Apple's track record in new product categories suggests a polished, if limited, first-generation device.

Those who prioritize fashion may want to wait to see Apple's frame options and materials, as the company is reportedly planning multiple styles to appeal to different tastes.

The Bottom Line

Apple's smart glasses represent the company's next major wearable category, building on the success of Apple Watch and AirPods while addressing the growing market for AI-powered eyewear.

With an expected late 2026 announcement and focus on AI features rather than augmented reality displays, Apple is taking a pragmatic approach that leverages existing iPhone capabilities while establishing a foundation for more advanced future versions.

The most exciting aspects are the deep AI integration and fashion-focused design, which could make smart glasses more appealing to mainstream consumers than previous attempts from Google and others.

The biggest unknowns remain pricing, battery life, and specific AI capabilities, which will determine whether these glasses become must-have accessories or niche products.

We'll likely learn more as Apple's 2026 event calendar unfolds; the company could choose to announce the glasses at WWDC or alongside new iPhones.

Until then, the steady stream of leaks and patent filings will continue to paint an increasingly clear picture of what to expect from Apple's entry into the smart glasses market.