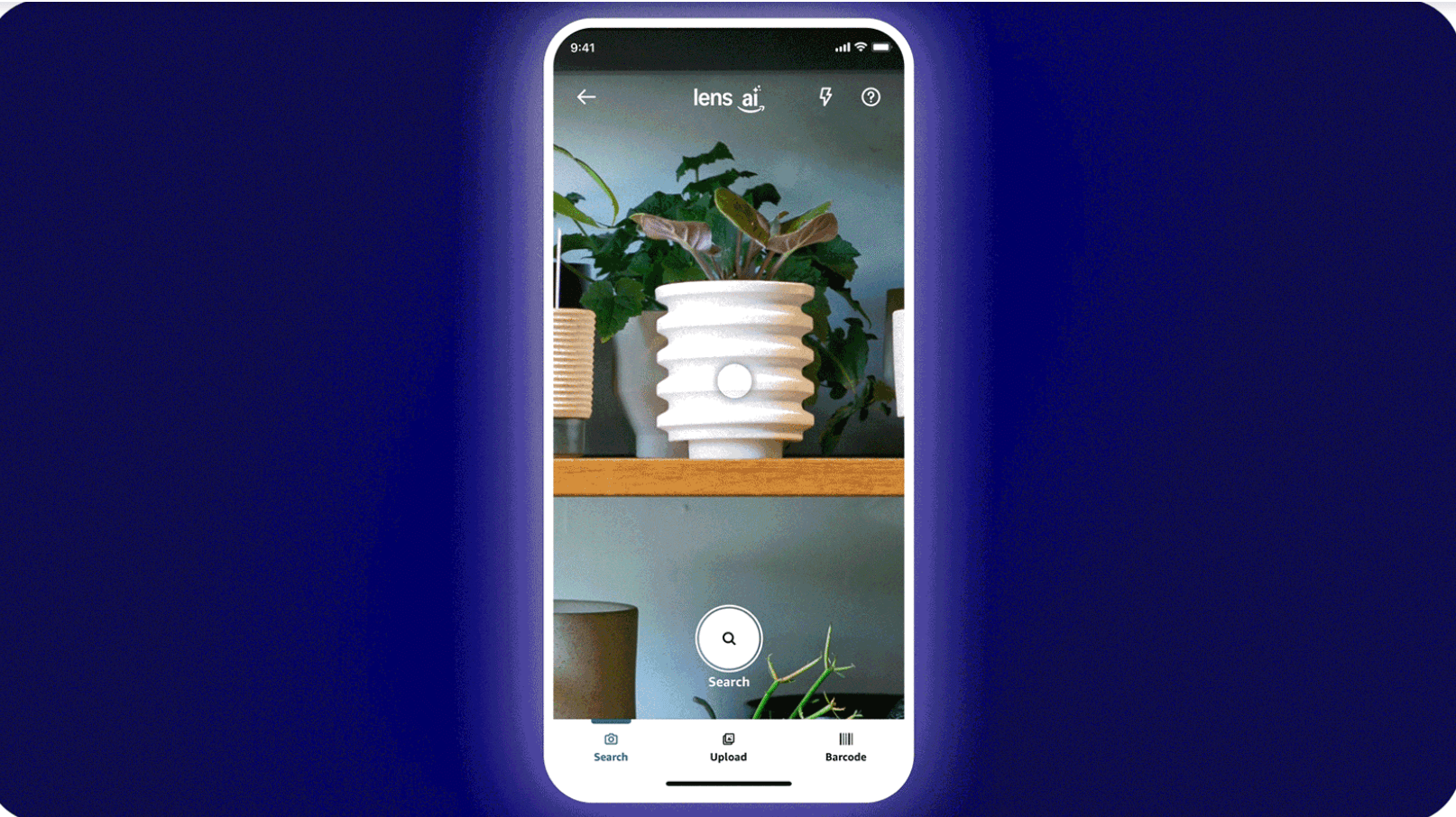

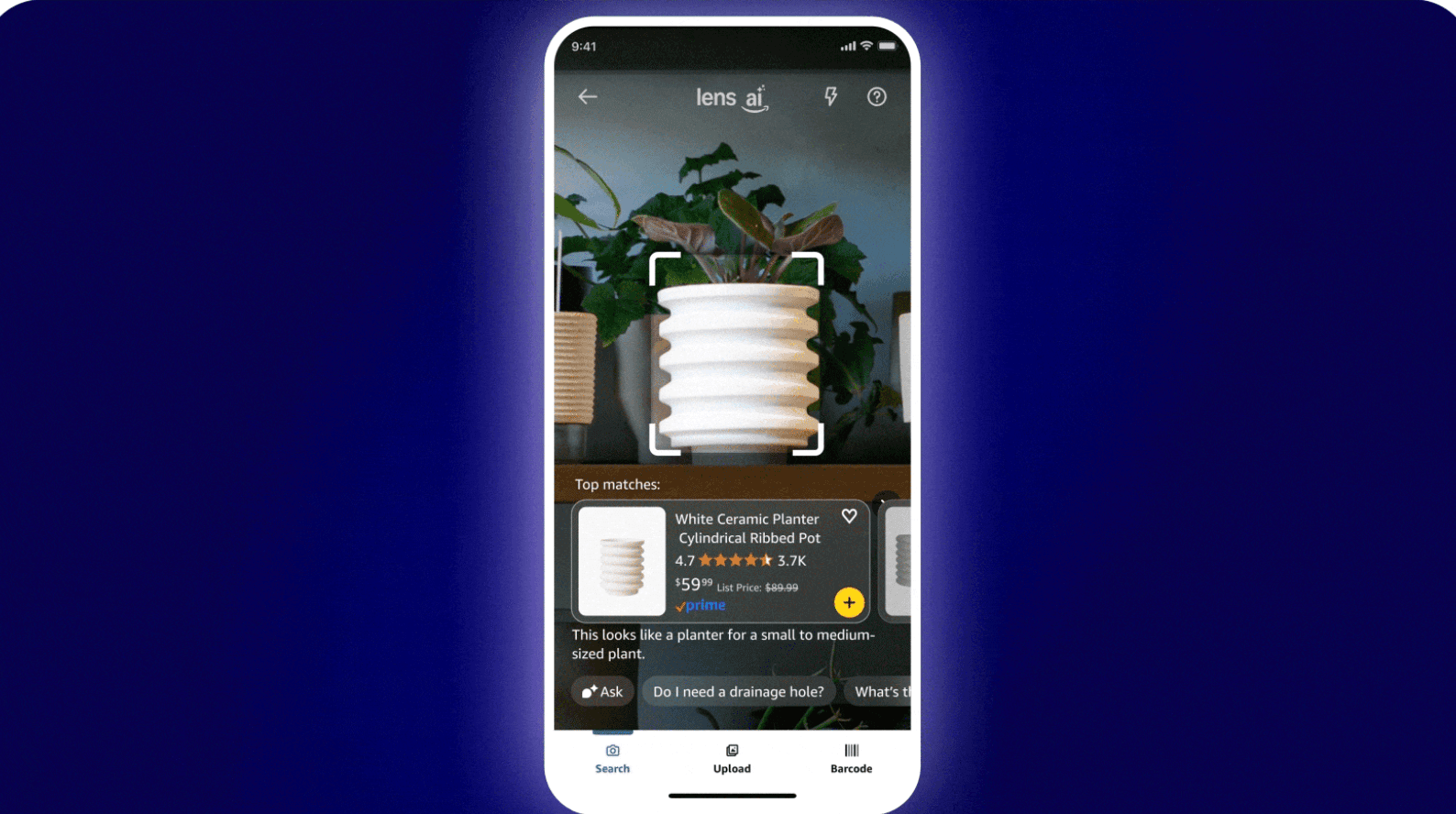

Picture this: You're walking past a neighbor's gorgeous outdoor planter, and instead of awkwardly asking where they bought it, you just point your phone at it. Instantly, Amazon shows you dozens of similar options, complete with prices and one-tap purchasing. That's the promise of Lens Live, Amazon's latest AI-powered visual search feature that's rolling out to tens of millions of iOS users starting today.


The retail giant's new tool transforms the traditional Amazon Lens experience into something that feels genuinely futuristic. Rather than the old snap-and-wait approach, Lens Live begins scanning products the moment you open the camera, displaying matching items in a swipeable carousel at the bottom of your screen. It's visual search that actually keeps up with how we naturally browse the world around us.

What sets this apart from previous visual search attempts isn't just the speed - it's the integration with Rufus, Amazon's conversational AI shopping assistant that launched early last year. While you're pointing your camera at that ceramic planter, Rufus chimes in with contextual suggestions and summaries about what makes specific products worth considering. The AI assistant can answer questions about materials, dimensions, or care instructions without forcing you to leave the camera view.

Under the hood, Lens Live represents a significant technical achievement in mobile AI. The system runs a lightweight computer vision model directly on your device, identifying objects in real time before matching them against billions of products in Amazon's catalog. Because this first step runs on-device, the initial detection happens without the lag typically associated with cloud-based image recognition.

Amazon's engineering team built the feature on AWS infrastructure, leveraging OpenSearch and SageMaker to deploy the necessary machine learning models at scale. The visual embedding model that powers the matching process has been trained specifically to handle the chaos of real-world environments - varying lighting conditions, partial views, and products at odd angles that would typically confuse traditional image recognition systems.
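Amazon hasn't published the matching code, but the general idea behind embedding-based visual search can be sketched in a few lines. The example below is a toy illustration only: the three-number vectors, the `CATALOG` dictionary, and the `top_matches` helper are all hypothetical, and it uses a brute-force cosine-similarity scan where a production system would rely on an approximate nearest-neighbor index over far larger vectors.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)

def top_matches(query, catalog, k=3):
    """Rank catalog items by similarity to the query embedding (brute force)."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [name for _, name in scored[:k]]

# Hypothetical toy embeddings; real models emit hundreds of dimensions.
CATALOG = {
    "ceramic planter": [0.9, 0.1, 0.0],
    "terracotta pot": [0.8, 0.3, 0.1],
    "desk lamp": [0.0, 0.2, 0.9],
}

# Stand-in for the embedding of whatever the camera is pointed at.
camera_embedding = [0.9, 0.1, 0.05]
print(top_matches(camera_embedding, CATALOG, k=2))
```

The takeaway is that visually similar products end up with nearby vectors, so "find similar items" becomes a distance lookup rather than a keyword search.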

The integration with Rufus adds another layer of complexity. The shopping assistant, which Amazon has been steadily rolling out globally, uses large language models to understand context and generate relevant product insights. When combined with Lens Live, it creates a shopping experience that feels more like having a knowledgeable friend help you browse than like just another search interface.

For users worried about the traditional Lens features disappearing, Amazon's keeping all the existing options intact. You can still take a photo, upload an existing image, or scan barcodes if that's your preference. The company seems to understand that different shopping scenarios call for different approaches - sometimes you want instant results while actively shopping, other times you're researching a photo you took earlier.

The rollout strategy suggests Amazon is being cautious with this launch. Starting with tens of millions of iOS users might sound massive, but it's actually a controlled deployment for a company of Amazon's scale. The gradual expansion allows the company to gather feedback and refine the AI models based on real-world usage patterns. Android users will have to wait a bit longer, though Amazon promises the feature will reach all U.S. customers in the coming months.

This launch comes at an interesting time for AI-powered shopping tools. The retail industry has been racing to integrate generative AI features, with everyone from eBay to smaller startups launching their own visual search and AI assistant tools. Amazon's advantage lies in its massive product catalog and in shopping data that lets it train models on actual purchasing behavior rather than just browsing patterns.

The real test will be whether Lens Live actually changes shopping behavior or becomes another rarely-used feature buried in the app. Early adopters with access to the feature report that the real-time scanning feels surprisingly natural, especially for home decor and fashion items where visual similarity matters more than exact matches. The ability to add items directly to your cart with a tap or save them to wishlists without leaving the camera view removes significant friction from the discovery-to-purchase pipeline.

Privacy-conscious users might appreciate that the initial object detection happens on-device, though the actual product matching and Rufus interactions still require cloud processing. Amazon hasn't detailed what visual data is stored or how it's used to improve the service, which will likely become a bigger conversation as more users gain access to the feature.

For Amazon, Lens Live represents more than just a cool tech demo - it's a strategic play to capture shopping moments that currently escape its ecosystem. Every time someone sees something they like in the real world but doesn't know how to search for it, that's a potential sale lost to friction. By making visual search instantaneous and conversational, the company is betting it can convert more of those fleeting shopping impulses into actual purchases.

The feature arrives as Amazon continues its aggressive push into AI-powered shopping experiences, joining other recent launches like personalized shopping prompts and AI-generated product descriptions. Together, these tools paint a picture of Amazon's vision for the future of e-commerce: less typing, more discovering, and AI assistants that actually understand what you're looking for even when you can't quite describe it yourself.