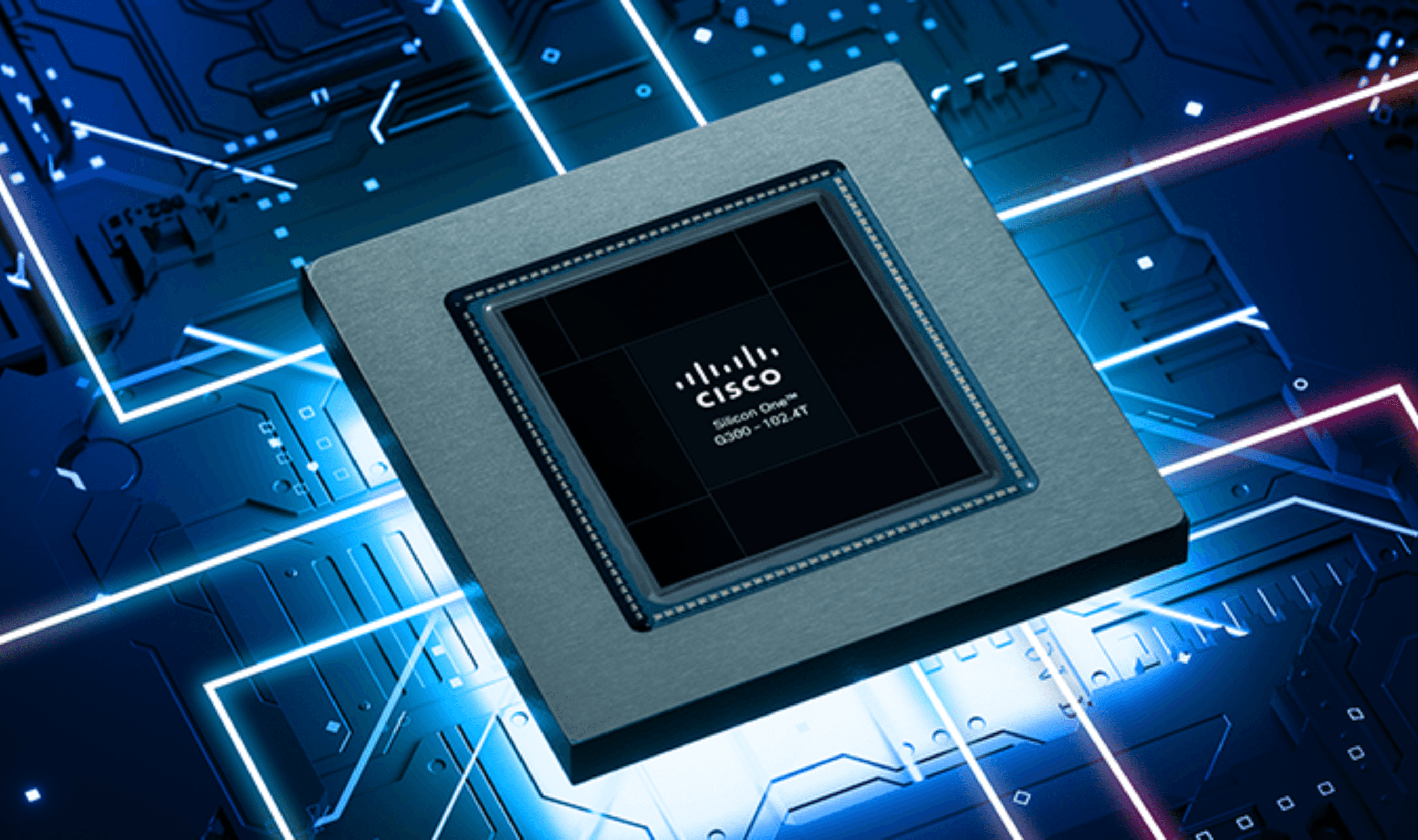

Cisco unveiled its Silicon One G300 switch chip on Tuesday, directly challenging Broadcom and Nvidia in the AI networking market. The 102.4 Tbps chip targets the $600 billion AI infrastructure spending boom with a second-half 2026 release.

The G300 cuts the completion time of AI computing tasks by 28% by automatically rerouting data within microseconds. Cisco's "shock absorber" features prevent network slowdowns during sudden traffic surges, a critical requirement for AI training clusters.

Manufactured on Taiwan Semiconductor Manufacturing Co's 3-nanometer process, the chip will power new Cisco N9000 and 8000 systems. These systems feature fully liquid-cooled designs that improve energy efficiency by nearly 70% compared to previous generations.

Cisco's entry intensifies competition with Broadcom's Tomahawk 6 and Nvidia's Spectrum-X Ethernet Photonics. Nvidia unveiled its own networking chip last month as part of a six-chip AI system, signaling the strategic importance of networking in AI infrastructure.

This comes as other AI companies explore alternatives to Nvidia's chips for faster inference speeds.

The G300 supports deployments of up to 128,000 GPUs using just 750 switches, addressing the scaling challenges of massive AI clusters.

New systems supporting Linear Pluggable Optics reduce optical module power consumption by 50% compared to retimed modules. This contributes to a 30% reduction in overall switch power consumption for more sustainable operations.

Cisco's Intelligent Collective Networking technology delivers a 33% increase in network utilization alongside the 28% reduction in job completion time. The company positions this as critical for maximizing GPU utilization in AI data centers.
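To put the 28% figure in concrete terms, here is a minimal Python sketch of the arithmetic. The 100-hour baseline is a hypothetical example, not a Cisco number, and the function names are illustrative only. It also shows that a 28% cut in completion time corresponds to roughly a 1.39x speedup, which is not the same as "28% faster" in throughput terms.

```python
# Figure quoted in the article: 28% reduction in job completion time.
REDUCTION = 0.28

def completion_time_after_reduction(baseline_hours: float, reduction: float) -> float:
    """Return the new job completion time after a fractional reduction."""
    return baseline_hours * (1 - reduction)

def speedup_from_reduction(reduction: float) -> float:
    """Return the equivalent speedup factor for a fractional time reduction."""
    return 1 / (1 - reduction)

# Hypothetical baseline: a training job that takes 100 hours today.
baseline_hours = 100.0
new_hours = completion_time_after_reduction(baseline_hours, REDUCTION)
speedup = speedup_from_reduction(REDUCTION)

print(f"New completion time: {new_hours:.1f} hours")
print(f"Equivalent speedup: {speedup:.2f}x")
```

Under that assumed baseline, the job would finish in about 72 hours instead of 100.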

The announcement follows Cisco's October 2025 introduction of the P200 routing chip and 8223 system for connecting AI data centers across hundreds of miles. Both developments target the growing need for distributed AI infrastructure beyond single data center limits.

Cisco expects initial availability through its N9000 and 8000 product lines, both equipped with 64 ports running at 1.6 Tbps each. The company will also offer the technology in modular platforms and disaggregated chassis for architectural consistency across network sizes.
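The port count and the headline chip capacity line up. As a quick check, this short Python sketch multiplies the two figures quoted in the article; the constant and function names are illustrative assumptions, not Cisco terminology.

```python
import math

# Figures quoted in the article.
PORTS_PER_SYSTEM = 64
PORT_SPEED_TBPS = 1.6
CHIP_CAPACITY_TBPS = 102.4

def aggregate_port_bandwidth_tbps(ports: int, port_speed_tbps: float) -> float:
    """Return the total bandwidth across all ports, in Tbps."""
    return ports * port_speed_tbps

total_tbps = aggregate_port_bandwidth_tbps(PORTS_PER_SYSTEM, PORT_SPEED_TBPS)
matches_chip = math.isclose(total_tbps, CHIP_CAPACITY_TBPS)

print(f"Aggregate port bandwidth: {total_tbps:.1f} Tbps")
print(f"Matches quoted chip capacity: {matches_chip}")
```

In other words, 64 ports at 1.6 Tbps each account for the full 102.4 Tbps the chip is rated for.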

With 102.4 Tbps of capacity and support for deployments of up to 128,000 GPUs, the G300 is Cisco's bid for a larger share of the AI infrastructure market when it ships in the second half of 2026.